By Federica Cocco

This article is cross-posted on DataDrivenJournalism.net, the Open Knowledge Foundation blog and the Data Journalism Blog.

Ravensbourne college is an ultramodern cubist design school which abuts the O2 arena on the Greenwich peninsula. It is perhaps an unusual and yet apt setting for journalists to meet.

Members of the Open Knowledge Foundation and the European Journalism Centre saw this as a perfect opportunity to herd together a number of prominent journalists and developers who, fuelled by an unlimited supply of mochaccinos, started work on the first Data Journalism Handbook.

The occasion was the yearly Mozilla Festival, which acts as an incubator to many such gatherings. This year the focus was on media, freedom and the web.

The manual aims to address one crucial problem: “There are a lot of useful resources on the web,” Liliana Bounegru of the EJC said, “but they are all scattered in different places. So what we’re trying to do is put everything together and have a comprehensive step-by-step guide”.

In data journalism, most people are self-taught, and many find it hard to keep up to date with every tool produced by the industry. “It could be vital having a handbook that really explains to journalists how you can approach data journalism from scratch with no prior knowledge,” says Caelainn Barr of the Bureau of Investigative Journalism.

Friedrich Lindenberg of the OKF believes there is a real urgency in making newsrooms data-literate: “If journalists want to keep up with the information they need to learn coding, and some bits of data analysis and data-slicing techniques. That will make much better journalism and increase accountability.”

And who better than the New York Times’ Interactive Editor Aron Pilhofer, The Guardian Data Blog’s Simon Rogers and others to lead the ambitious efforts?

Around 40 people, charged with sorting the wheat from the chaff, joined them on the sixth floor of the college for a 48-hour session.

The first draft of the handbook should be ready in the coming months, as other contributors from every corner of the web are still working on their input.

Of course the first data journalism handbook had to be open source. How else would it be able to age gracefully and be relevant in years to come?

Workshops of this sort represent a decisive break from the past. Aspiring data journalists will know that hands-on sessions are a cut above the usual lectures featuring knowledgeable speakers and PowerPoint presentations. Discussing the topic and citing examples is not enough. After all, if you give a man a fish you have fed him for a day. But if you teach a man how to fish, you have fed him for a lifetime.

Jonathan Gray concurs: “Rather than just provide examples of things that have been done with data, we want to make it easier for journalists to understand what data is available, what tools they can use to work with data, how they can visualise data sets and how they can integrate that with the existing workflows of their news organisations.”

At the event itself, after a brief introduction, the crowd split into five groups and began collaborating on the chapters of the handbook. Some were there to impart knowledge, others to absorb it and ask questions.

“I like the fact that everyone is bringing a different skillset to the table, and we’re all challenging each other”, one participant said.

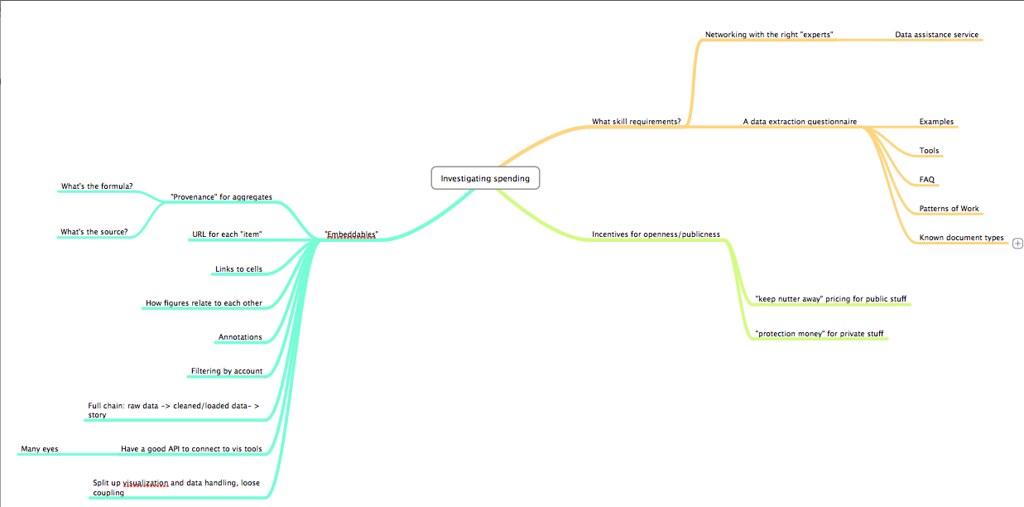

Francis Irving, CEO of ScraperWiki, led the session on new methods of data acquisition. He believes the collaboration between journalists, programmers, developers and designers, though crucial, can generate a culture clash: “When working with data, there’s a communication question, how do you convey what you need to someone more technical and how do they then use that to find it in a way that’s useful.”

“A project like this is quite necessary,” noted Pilhofer. “It’s kind of surprising someone hasn’t tried to do this until now.”

The free e-book will be downloadable from the European Journalism Centre’s DataDrivenJournalism.net/handbook in the coming months. If you want to follow our progress or contribute to the handbook you can get in touch via the data journalism mailing list, the Twitter hashtags #ddj and #ddjbook, or email bounegru@ejc.net.

Watch the full video report from the Data Journalism Handbook session at the Mozilla Festival, held 4-6 November in London.

The organisers would like to thank everyone who is contributing to the handbook for their input, and Kate Hudson for the beautiful graphics.

About the author: Federica Cocco is a freelance journalist and the former editor of Owni.eu, a data-driven investigative journalism site based in Paris. She has also worked with Wired, Channel 4 and the Guardian.