source: Joel Gunter from Journalism.co.uk

The open data movement, with the US and UK governments to the fore, is putting a vast and unprecedented quantity of republishable public data on the web. This information tsunami requires organisation, interpretation and elaboration by the media if anything eye-catching is to be made of it.

Experts gathered at the International Journalism Festival in Perugia last week to discuss what journalistic skills are required for data journalism.

Jonathan Gray, community coordinator for the Open Knowledge Foundation, spoke on an open data panel about the usability of data. “The key term in open data is ‘re-use’,” he told Joel Gunter from Journalism.co.uk.

Government data has been available online for years, but it has been locked up under an "all rights reserved" licence or a confusing mixture of different terms and conditions.

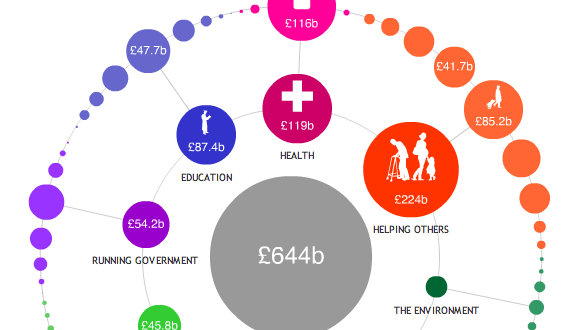

The Open Knowledge Foundation finds beneficial ways to apply that data in projects such as Where Does My Money Go?, which analyses data about UK public spending. “It is about giving people literacy with public information,” Gray said.

The key is to allow many more people to understand complex information quickly.

Along with its visualisation and analysis projects, the Open Knowledge Foundation has established opendefinition.org, which provides criteria for openness in relation to data, content and software services, and opendatasearch.org, which is aggregating open data sets from around the world.

“Tools so good that they are invisible. This is what the open data movement needs,” Gray said.

Some of the Google tools that millions use every day are simple, effective open tools that we turn to without thinking, tools that are “so good we don’t even know that they are there”, he added.

Countries such as Italy and France are very enthusiastic about the future of open data. Georgia has launched its own open data portal, opendata.ge.

The US, with data.gov, spends £34 million a year maintaining its various open data sites. Other portals are cheap by comparison: the UK’s data.gov.uk reportedly cost £250,000 to set up.