This post was written by Mimi Rojanasakul on Infosthetics.com. She is an artist and designer based in New York, currently pursuing her MFA in Communications Design at Pratt Institute. Say hello or follow her @mimiosity.

The 2011 Strata Conference in New York City kicked off on Thursday with a brief introduction by O’Reilly’s own Edd Dumbill. He ventures a bold assessment of the present social condition and how data science plays into it: the growth of our networks, government, and information feels as if it is slipping out of our control, evolving like a living organism. Despite this, Dumbill is optimistic, placing the hope of navigating this new “synthetic world” on the emerging role of the data scientist. And so the stage is set for the speakers to follow.

The first keynote comes from Rachel Sterne, New York City’s first Chief Digital Officer and a who’s who in the digital media world since her early twenties. Though there was some of the expected bureaucratic language, examples of what was being done with the city’s open data showed very real progress in making parts of government more accessible and allowing the public to engage more directly with their community. New York City is uniquely situated for a project of this nature, and its individual citizens are a key factor: densely packed in and cheerfully tagging, tweeting, and looking for someone to share their thoughts with (or perhaps gripe to). Through NYC Digital’s app-building competitions, hackathons, and more accessible web presence, New Yorkers are able to compose their own useful narratives or tools, from finding parking to spotting restaurants on the verge of closing over health code violations. By the people and for the people, or at least an encouraging start.

[ New York City evacuation zone map was shared with other parties to protect against heavy internet traffic taking down any individual site ]

On matters of a completely different spatial scale, we turn to Jon Jenkins of the SETI Institute, Co-Investigator on NASA’s Kepler mission. The Kepler spacecraft, launched in March of 2009, boasts a 95-megapixel camera that watches over 145,000 stars in its fixed gaze for the tiny dip in brightness caused by a planet passing in front of its star, snapping a photo every 30 minutes with bated breath for potential candidates. As of February 2011, over 1,200 planetary candidates had been identified. Despite the cosmic scale of Kepler’s investigations, Jenkins communicates with a Carl-Sagan-like sense of wonder that is difficult not to get swept up in. Video renderings of distant solar system fly-bys show worlds not unlike our own, a reminder that the motives for some of our greatest accomplishments come from an innate, irrepressible curiosity.
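For readers curious what "checking for planets blocking a star" might look like in code, here is a toy sketch. It is not Kepler's actual pipeline, which the talk did not detail; the function names, the 0.5% depth threshold, and the synthetic brightness values are all assumptions made for illustration. It simply flags samples that fall noticeably below a star's typical brightness and groups consecutive ones into candidate transits.

```python
from statistics import median

def find_dips(flux, depth=0.005):
    """Return indices whose brightness is more than `depth` below the median.

    `depth` is a hypothetical fractional threshold chosen for this example.
    """
    baseline = median(flux)
    threshold = baseline * (1.0 - depth)
    return [i for i, value in enumerate(flux) if value < threshold]

def group_runs(indices):
    """Merge consecutive indices into (start, end) runs, one per candidate dip."""
    runs = []
    for i in indices:
        if runs and i == runs[-1][1] + 1:
            runs[-1] = (runs[-1][0], i)  # extend the current run
        else:
            runs.append((i, i))          # start a new run
    return runs

# Synthetic light curve: one sample every 30 minutes for 24 hours,
# with two made-up 1% dips in brightness.
flux = [1.0] * 48
for i in (10, 11, 12, 34, 35, 36):
    flux[i] = 0.99

transits = group_runs(find_dips(flux))

# Spacing between the starts of the two dips, at 0.5 hours per sample.
period_hours = (transits[1][0] - transits[0][0]) * 0.5
```

A repeating dip at a regular interval is what would make a star worth a second look; real data is far noisier than this flat line, so a fixed threshold like the one above is only a starting point.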

[ Photo and graphic representation of Kepler’s field of vision ]

[ Recently discovered planet with two suns ]

Amazon’s John Rauser begins his own talk with a different story about staring at the sky. It’s 1750, Germany, and Tobias Mayer is about to discover the libration (wobble) of the Moon. Rauser argues that it was Mayer’s combination of “engineering sense” and mathematical ability that allowed him to take the first baby steps toward establishing what we now know as data science. While an earlier presenter, Randy Lea of Teradata, focused mostly on the technological advancements made in the field of big data analytics, Rauser emphasized the human characteristics demanded by this career. Along with the more obvious need for programming fluency and applied math, he cites writing and communication as the first major difference between mediocrity and excellence, along with a strong, self-critical skepticism and passionate curiosity. These last three virtues could just as easily be transplanted into any other field, and judging from the applause and approving tweets, their relevance clearly struck a nerve with the crowd.

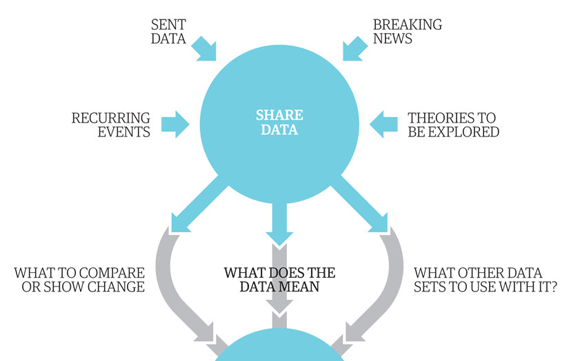

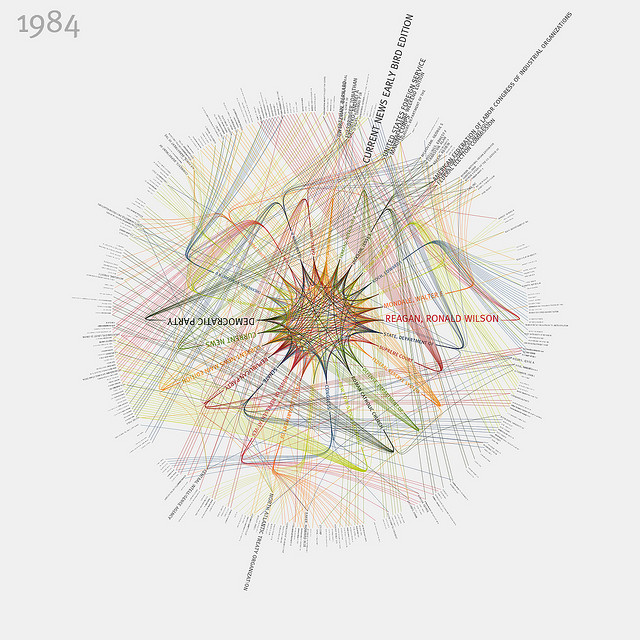

From a design perspective, the obvious continuation to so many of these presentations was the successful visual communication of all this data. My aesthetic cravings immediately subside when Jer Thorp, current Data Artist in Residence at the New York Times, takes the stage. His presentation walks us through a commission to design an algorithm for Michael Arad’s 9/11 memorial that would place names according to the victims’ relationships to one another. Though clustering the 2900 names and 1400 adjacency requests was at first an issue of optimization-by-algorithm, manual typographic layout and human judgement were still necessary to achieve the aesthetic perfection needed. Thorp also made a great point about visualizations being not only an end product but also a valuable part of the creative process earlier on.

[ Early visualization of density of relationships ]

[vimeo 23444105]

WTC Names Arrangement Tool from blprnt on Vimeo.

[ Processing tool built to arrange the name clusters by algorithm and by hand ]
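To give a feel for the first, purely algorithmic step, here is a minimal sketch of grouping names by adjacency requests. It is not Thorp's method (his tool was built in Processing and also had to solve the physical layout, which this ignores); it only assumes that each request is a pair of names that should end up together, and uses a standard union-find structure to collect linked names into clusters. The function name and the placeholder names are hypothetical.

```python
def cluster_names(names, adjacency_requests):
    """Group names into clusters connected by adjacency requests (union-find)."""
    parent = {name: name for name in names}

    def find(name):
        # Follow parent links to the cluster's representative,
        # shortening the chain as we go (path halving).
        while parent[name] != name:
            parent[name] = parent[parent[name]]
            name = parent[name]
        return name

    for a, b in adjacency_requests:
        parent[find(a)] = find(b)  # merge the two clusters

    clusters = {}
    for name in names:
        clusters.setdefault(find(name), []).append(name)

    # Largest clusters first; ties keep their original order.
    return sorted(clusters.values(), key=len, reverse=True)

# Placeholder data for illustration only.
names = ["Name A", "Name B", "Name C", "Name D", "Name E"]
requests = [("Name A", "Name B"), ("Name B", "Name C"), ("Name D", "Name E")]

clusters = cluster_names(names, requests)
```

Here the three requests produce one cluster of three names and one of two. Deciding where each cluster sits on the memorial, and how the names within it are ordered, is the part that still called for layout work and human judgement.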

To be honest, I was skeptical at first of the decision to cluster the names by association rather than simple alphabetization: an unnecessary gimmick for what should be an uncomplicated, moving experience. Part of the power of the Vietnam Memorial was its expression of the enormous magnitude of human casualties with simple typography, while its logical organization provided map and key for those purposefully looking for one name. But as Thorp explained these adjacencies in context, the beauty of the reasoning began to unfold. First, it is a matter of new ways of understanding. We do not browse, we search. And collecting and visualizing our identity based on our social networks has become second nature. It has the potential to tell stories about each individual’s life that go beyond the individual experience, creating a physical and imagined space to extend this unifying connectivity.

Overall, it was a humanizing first experience with professional “big data.” Coming from a background in art and design, I had some apprehensions about my ability to understand the myriad technical disciplines represented at Strata. Despite this, the experience so far has been one of unexpected delights: a keenly curated look at where we are with data today.

I admit this first post was low on data visualizations, but there were plenty of interface and graphics talks in the afternoon sessions to share in the next posts. Stay tuned!
